Create Power Apps Collections Over 2000 Rows With These 4 Tricks

Power Apps collections are limited to a size of 2,000 records unless you know the special techniques needed to go past that number. This is because the ClearCollect and Collect functions can only return as many records as the delegation limit allows. Most times it is preferable to read data directly from a datasource like SharePoint for performance reasons but other times you need to get all of your data into a collection for table-shaping purposes or to enable offline-mode. In this article I will show you how to create Power Apps collections with over 2,000 rows.

Double Maximum Collection Size To 4,000 Rows

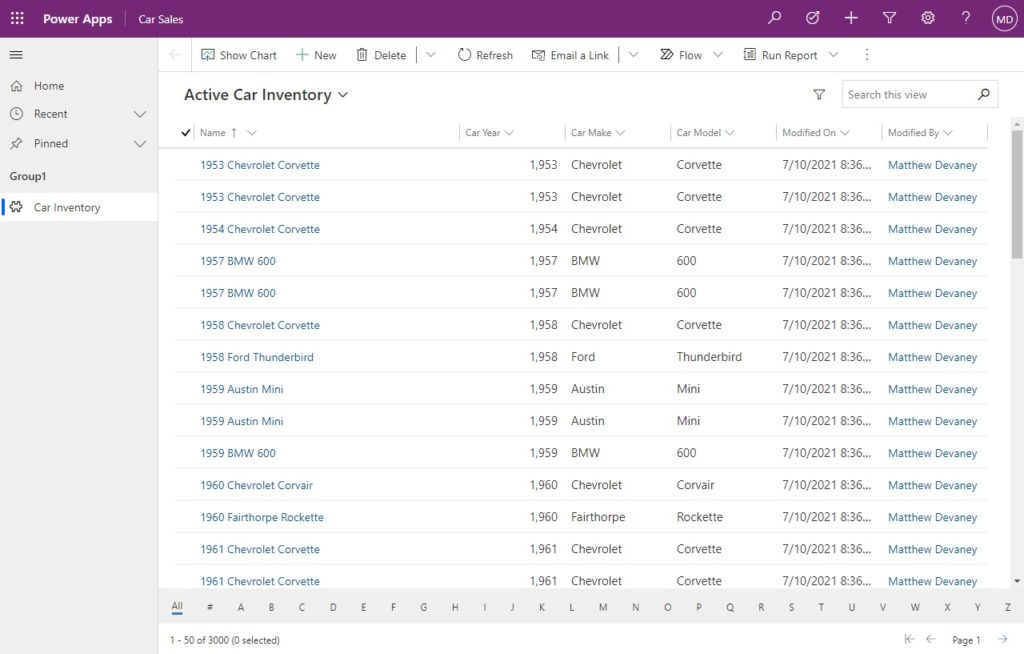

Power Apps can only load 2,000 records into a collection using the ClearCollect function but one of my fellow super users on the community forums @Drrickryp came up with a simple technique to double the limit. Here’s an example of how it works: the SharePoint list below called Car Inventory has 3,000 records.

| ID | Title | CarYear | CarMake | CarModel |

| 1 | 2009 Dodge Ram | 2009 | Dodge | Ram |

| 2 | 2013 Honda Accord | 2013 | Honda | Accord |

| 3 | 2012 Ford Focus | 2012 | Ford | Focus |

| 4 | 2016 Toyota Corrola | 2016 | Toyota | Corrola |

| 5 | 2018 Chevrolet Silverado | 2018 | Chevrolet | Silverado |

| … | … | … | … | … |

| 3000 | 2015 Nissan Sentra | 2015 | Nissan | Sentra |

We can use this Power Apps code in the OnSelect property of a button to load 2 temporary collections with data from SharePoint list into a single collection and then remove then duplicates. This code works because the row limit for ClearCollect only applies when loading data from a datasource, not local data in memory. When we check the row count for the colCars collection we see that it contains all 3,000 records!

Concurrent(

ClearCollect(

colCarsChunk1,

Sort('Car Inventory', ID, Ascending)

),

ClearCollect(

colCarsChunk2,

Sort('Car Inventory', ID, Descending)

)

);

ClearCollect(

colCars,

colCarsChunk1,

Filter(colCarsChunk2, Not(ID in colCarsChunk1.ID))

);

Clear(colCarsChunk1);

Clear(colCarsChunk2);Code language: JavaScript (javascript)

Collect Data In Chunks With For All Loops

A ForAll function can be used to collect several sets of rows from a datasource that match a list of supplied values. The only limitation is each individual set cannot exceed 2,000 rows. For example, using the Car Inventory SharePoint list and the ForAll function as shown below I can store all rows where the CarMake equals Ford, Dodge or Toyota in a single collection. The rows collected for Ford, Dodge or Toyota cannot be greater than 2,000 rows for each CarMake but the total size of the collection can exceed 2,000 rows.

Clear(colCars);

ForAll(

["Ford", "Dodge", "Toyota"],

Collect(colCars, Filter('Car Inventory', CarMake=Value))

);Code language: JavaScript (javascript)

This technique is also comes in handy when working with combo boxes. We might have chosen to build an app that allows the user to select multiple car makes just like this.

We can make the following changes to our code below and now it will collect all of the results based on values selected by the user in the combo box.

Clear(colCars);

ForAll(

ComboBox1.SelectedItems.Value,

Collect(colCars, Filter('Car Inventory', CarMake=Value))

);Code language: JavaScript (javascript)

Get A JSON Response From Power Automate

A Power Automate flow can return over 2,000 records to Power Apps with the HTTP Response action. This technique was pioneer by Power Platform Program Manager Brian Dang. It uses a premium action in the flow so only users with a per app plan or a per user plan will be able to take advantage of it.

Assuming we are using Dataverse instead of a SharePoint list to store the car inventory data…

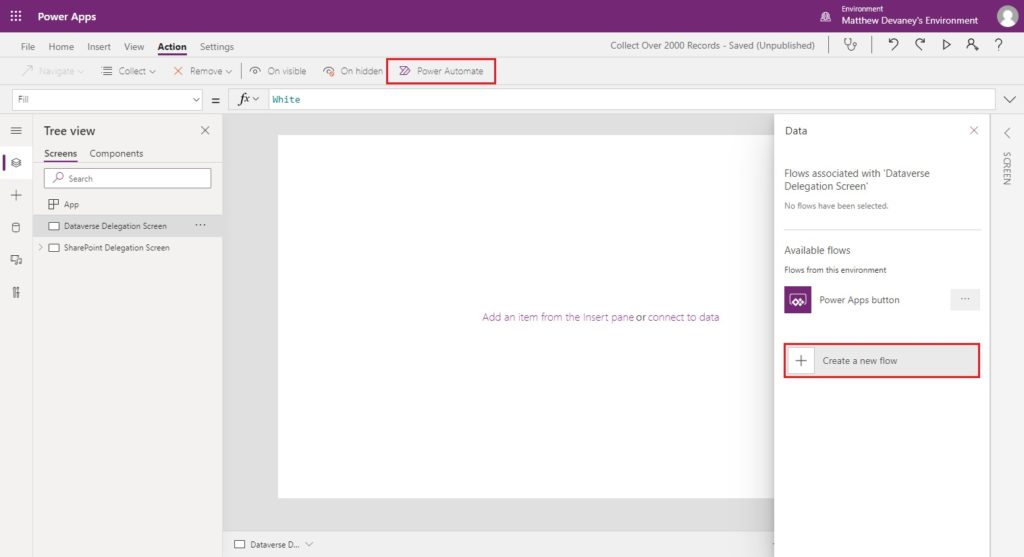

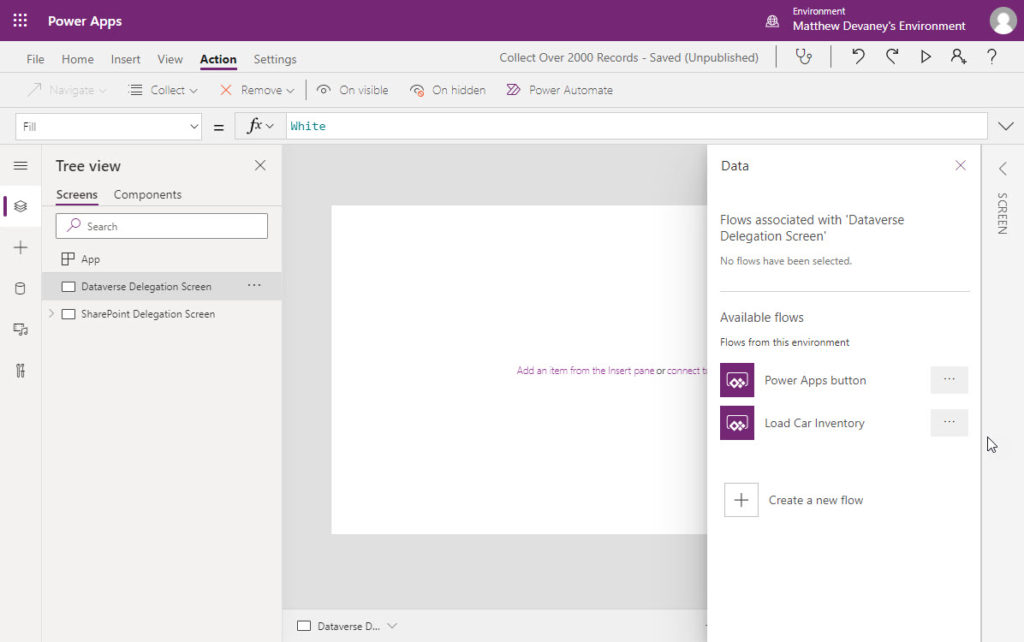

…create a new app, go to the Action tab and select Power Automate. Then create a new flow.

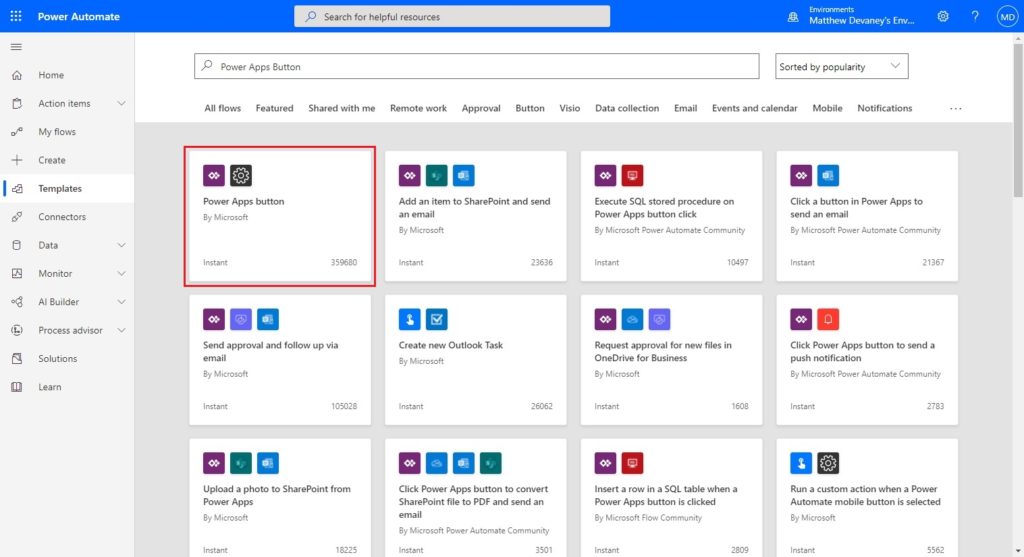

Choose the Power Apps button template.

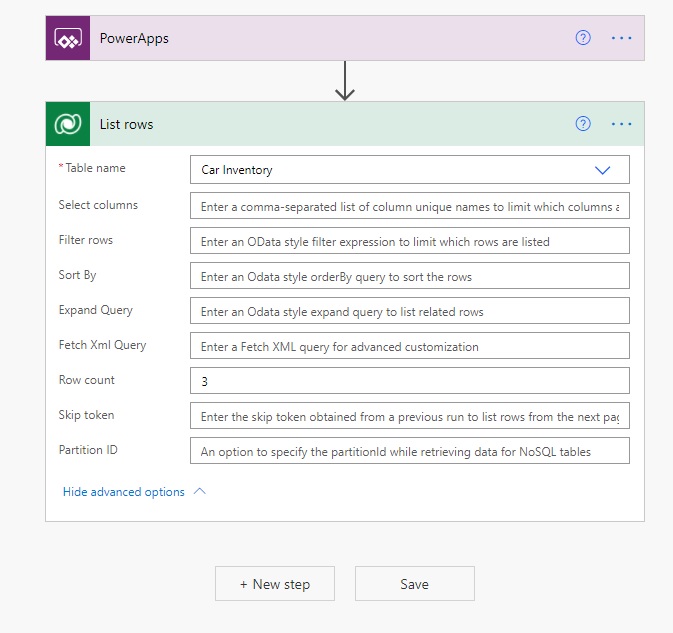

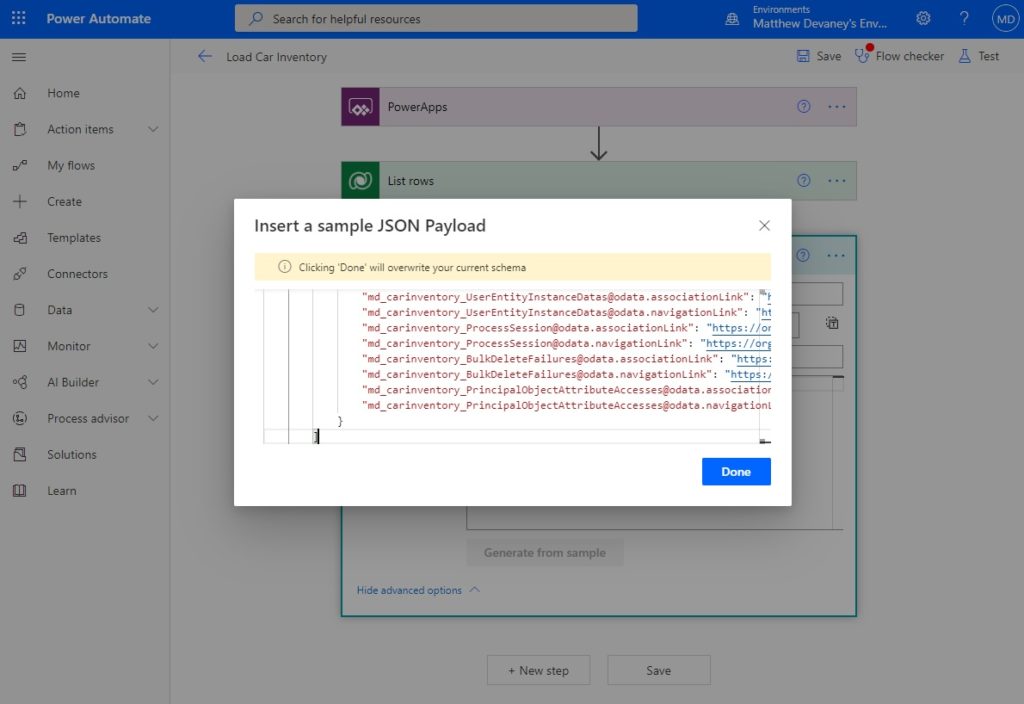

Name the flow Load Car Inventory. Then add a Dataverse – List Rows action. Set the table name to Car Inventory and update the Row Count to 3.

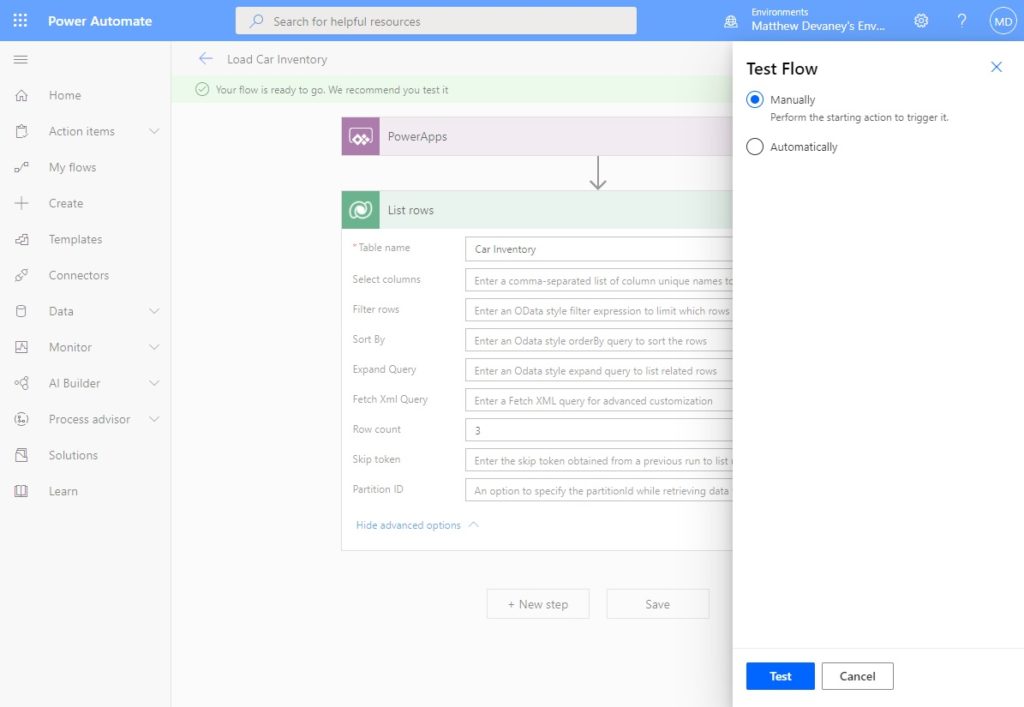

Save and test the flow manually.

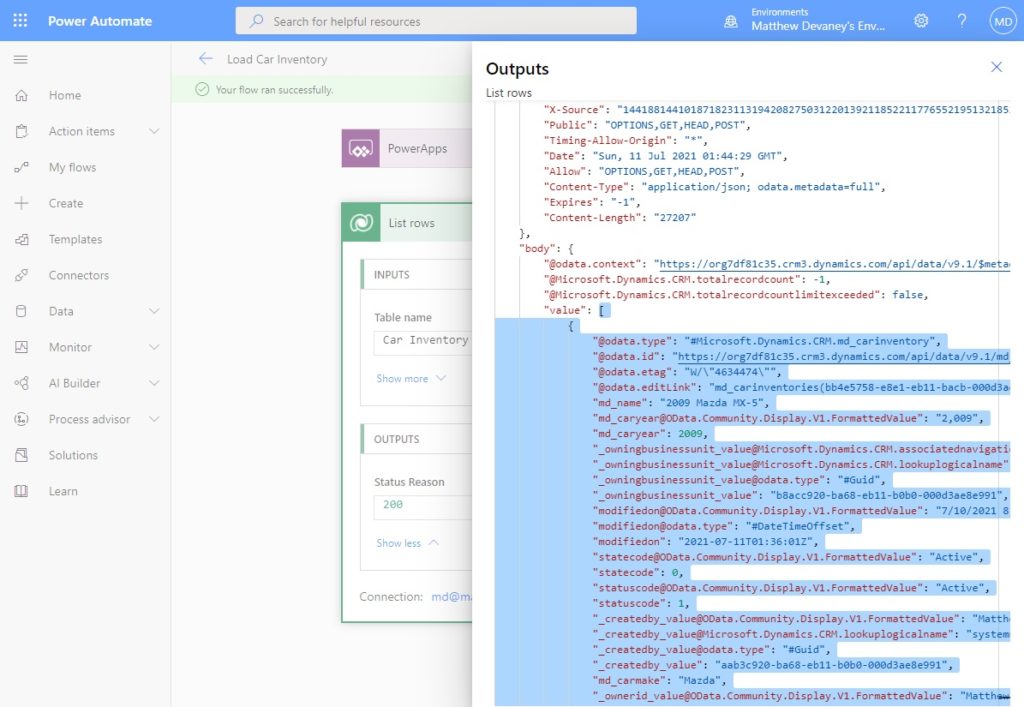

Get the raw outputs for the list rows action. Open the notepad application in Windows and copy the list of objects in the value property (highlighted below) into it. Then close the Outputs menu and click the Edit button to re-open the flow in edit mode.

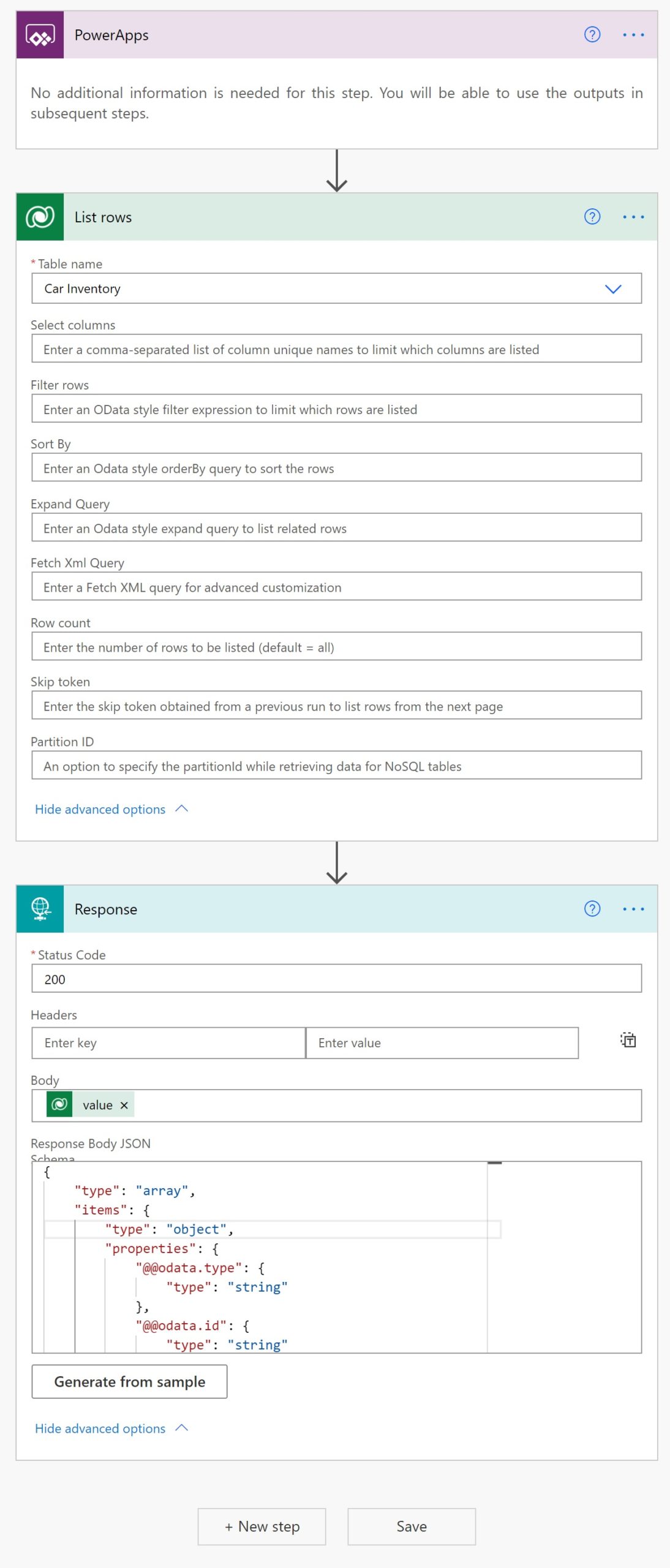

Remove the row count from the Dataverse – List Rows action. Next, add a Response action with the value of List Rows as the body. Click generate from sample…

…and copy + paste the list of objects we temporarily stored in notepad into the pop-up menu that appears. Select done then Save the flow.

Go back to Power Apps Studio and choose the Load Car Inventory flow to connect it to the app.

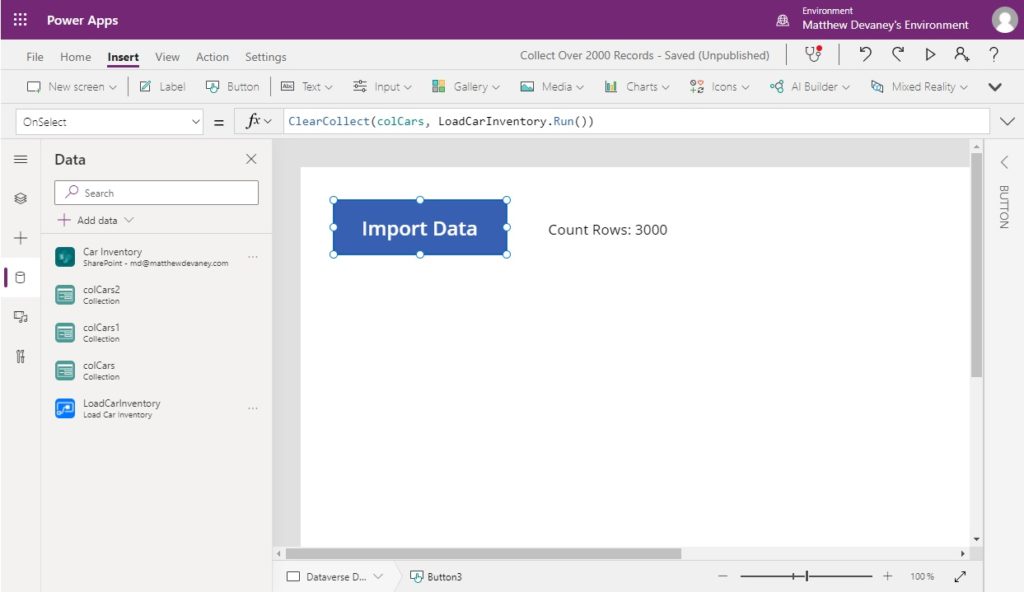

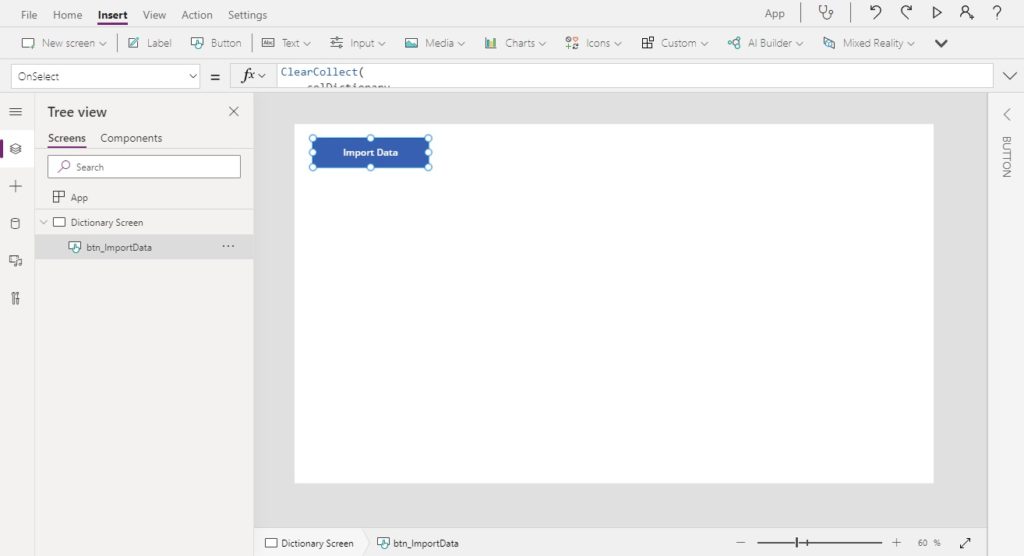

Create a new button with the text “Import Data”…

…and use this code in the OnSelect property. Press the button in play mode and it will collect all 3,000 rows from Dataverse even though the delegation limit is only 2,000 rows.

ClearCollect(colCars, LoadCarInventory.Run())Code language: CSS (css)

To check the size of the collection make a label and put this code in the text property.

"Count Rows: "&CountRows(colCars)Code language: JavaScript (javascript)

Add Static Data With The Import From Excel Connector (100,000+ Rows)

The import from excel connector loads data from an Excel spreadsheet directly into an app. Once the Excel spreadsheet is loaded into the app it cannot be edited without being re-imported by the app-maker. Therefore, static data only makes sense when the data is not expected to be changed. Some examples are:

- Localized text in multi-language apps

- A list of valid postal codes

- Words found in a dictionary

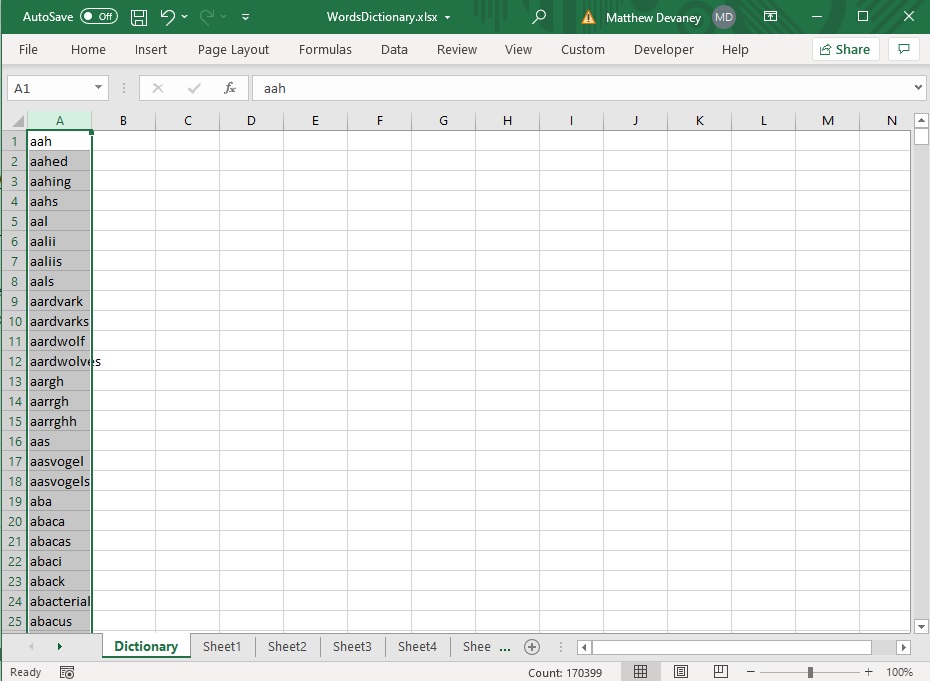

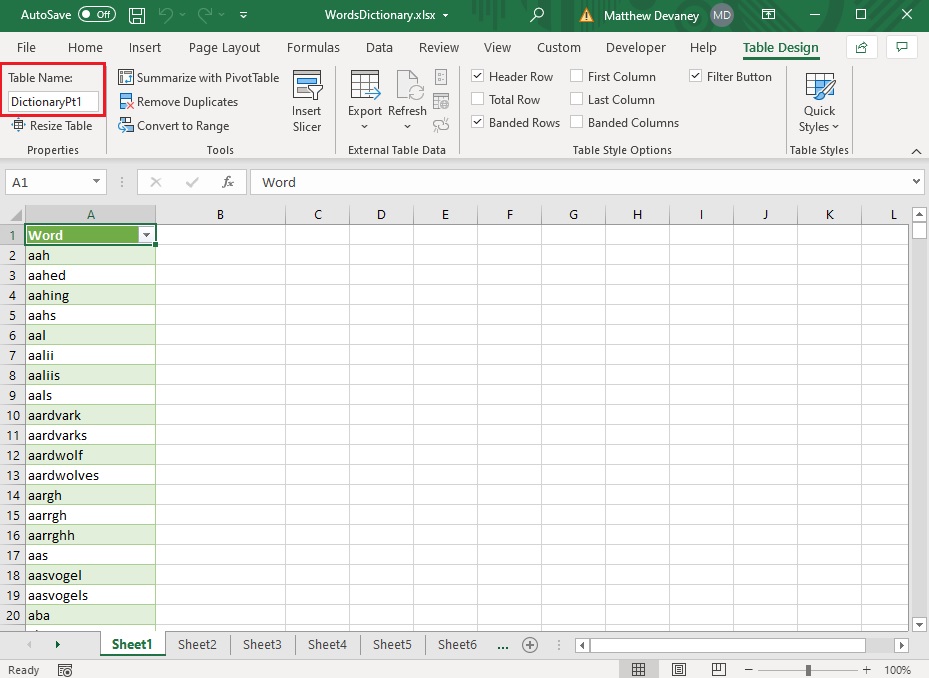

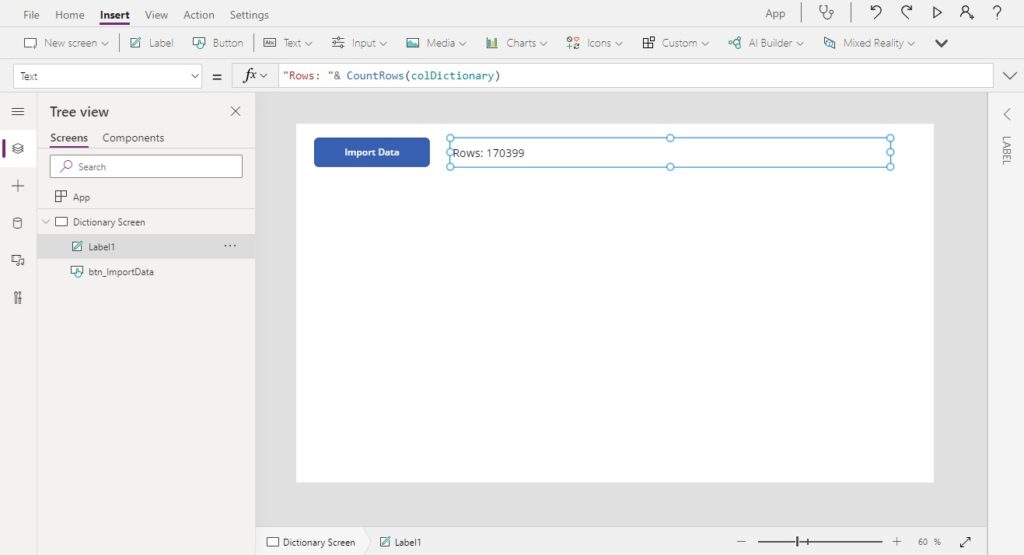

For this example, we’ll use a list of 170,399 words found in the Scrabble dictionary.

Group the list of words into Excel tables each with 15,000 records or less. This is important because Power Apps cannot read more than 15,000 rows in a table. Give the Excel tables you create a unique name.

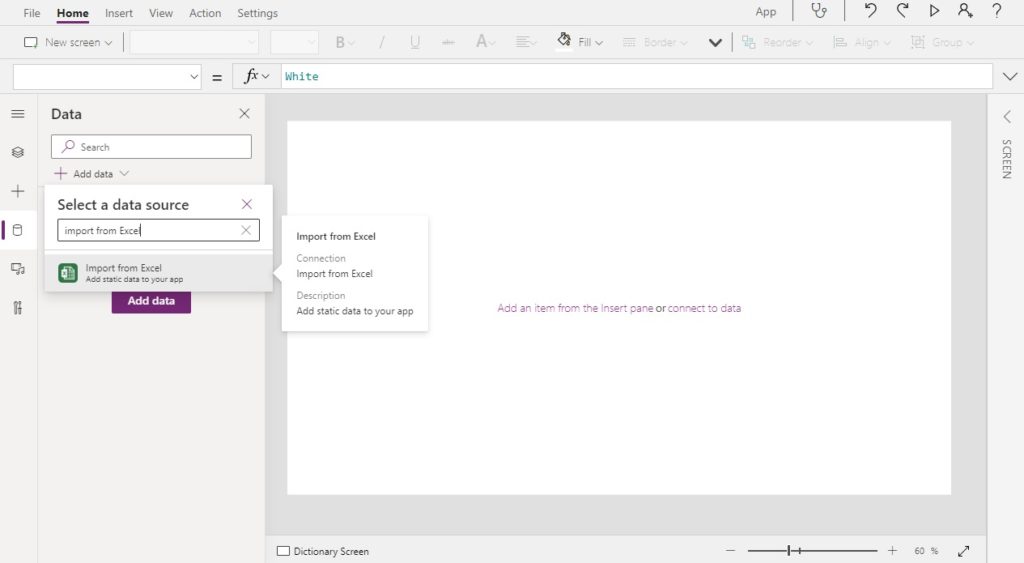

Open Power Apps Studio and add the Import from Excel datasource.

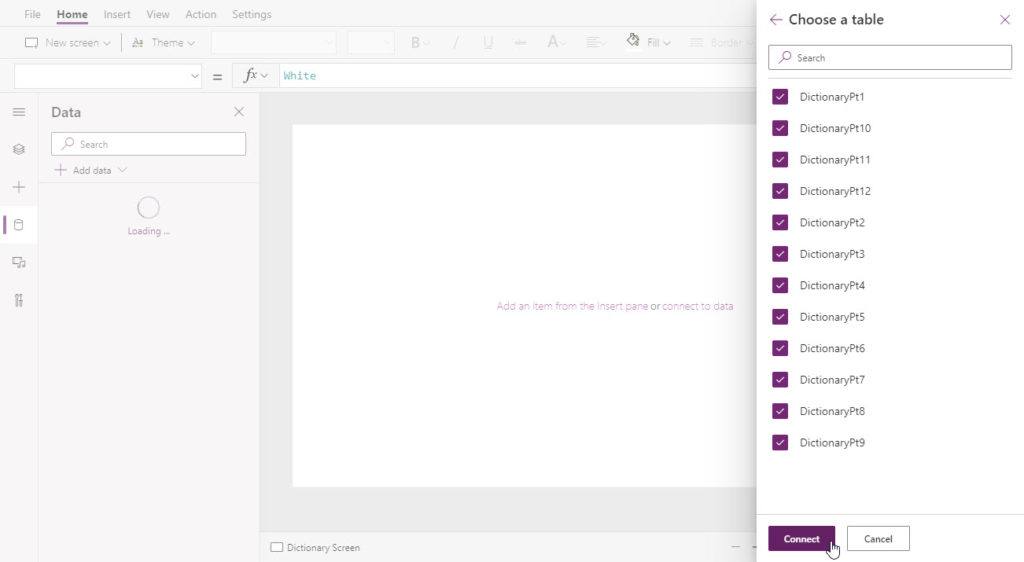

Check all of the Excel tables we created and click Connect.

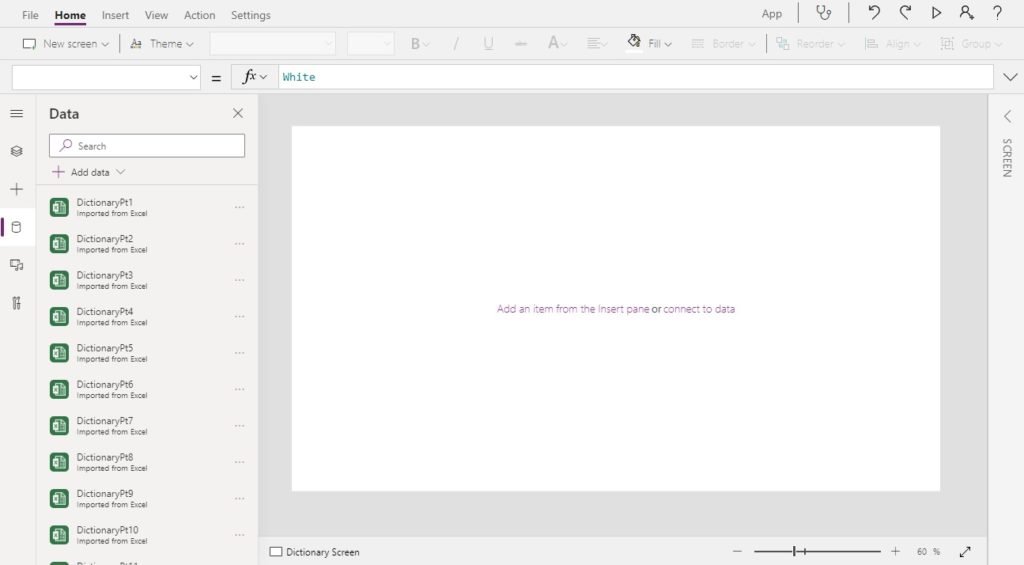

All of the Excel tables will appear as individual datasources.

Create a button with the text “Import Data”…

…then use this code in the OnSelect property to collect all of the individual Excel tables into a single collection.

ClearCollect(

colDictionary,

DictionaryPt1,

DictionaryPt2,

DictionaryPt3,

DictionaryPt4,

DictionaryPt5,

DictionaryPt6,

DictionaryPt7,

DictionaryPt8,

DictionaryPt9,

DictionaryPt10,

DictionaryPt11,

DictionaryPt12

)

Make a label beside the button and use this code to display the row count of the collection.

"Count Rows: "&CountRows(colDictionary)Code language: JavaScript (javascript)

Then press the button and wait for the collection to load the data. Once its finished we can see that all 170,399 rows were successfully loaded into the collection.

Did You Enjoy This Article? 😺

Subscribe to get new Copilot Studio articles sent to your inbox each week for FREE

Questions?

If you have any questions or feedback about Create Power Apps Collections Over 2000 Rows With These 4 Tricks please leave a message in the comments section below. You can post using your email address and are not required to create an account to join the discussion.

Great article. Thank you so much Matthew.

Necdet,

It always makes me smile to see a comment from you. Thank you for leaving one!

Great posts! Always learn a lot at here.

Thanks PandaLord. Love the name!

Great tips mate, and here’s a Tip #5 , using Sequence() 🙂

https://powerusers.microsoft.com/t5/Power-Apps-Community-Blog/The-power-of-Sequence-when-used-to-collect-gt-2000-records-into/ba-p/819453

Although, I’ve heard this method is quite slow on very large Data sets due to not using Concurrent(). It’d be great to work that into it …

Eddie,

Good to hear from you my friend, thanks for sharing 🙂

Could you please explain to me how the IDNumber field gets populated initially? I am also keen to know if this method could work for a SharePoint holding 7,000 rows.

Hey Matt,

So the IDNumber field is populated as each new List item is added. There are a number of ways to do that (as I’m sure you are aware 🙂 ) but I’d probably

If you need to Retrofit an IDNumber then this would need to be done via Flow or I’ve even seen WarrenBelz do it via MS Access – it has a 2-way link to SharePoint Lists!

And yes, this will work on a 7,000 item List. It’ll actually work on any size list but as I said the larger the list the slower it’ll go, unfortunately.

Eddie,

Yes, its true. I could patch the IDNumber field just like you have proposed.

With({varRecordPatch: Patch(SPDatasource, Defaults(SPDatasource), {Field: “SomeText1”})}, Patch(SPDatasource, varRecordPatch, {IDNumber: varRecordPatch.ID}))

I was considering including a similar method in my article but decided I didn’t want to do a technique where it was necessary patch twice. But its a perfectly valid way of doing things. Maybe your advice will tip me over the edge and I’ll give it a shot… again, thanks for taking the time to comment. I’m pretty sure other readers will find your work helpful!

Hi Matthew,

Thanks for your sharing.

I have a question about import data from Excel.

I create three sheets in Excel, “Year108″,”Year109” and “Year1091”.

There are 2362 records in “Year1091″, but when I import data to PowerAPP, it’s only 2000 records be imported as the attached.

Please tell me how to fix it. Thanks a lot !!

Younger,

Two things to look at:

#1 Did you use the “Import From Excel” Connector? We must use this connector and not the “OneDrive” connector

#2 Import from Excel supports 15,000 rows per table. Its OK to try putting all of this data in 1 table.

Hi Matthew,

Thanks for your reminder.

It’s working now.

Thanks a lot !!

Hi Younger,

“Import from excel” data is stored our local machine right. But my data will store online and everybody will see my data.

Venkat,

Import from Excel data is stored in the cloud. But it is static data. You cannot so CRUD operations on it through the app.

Hi Matthew,

I’m trying to implement Getting a JSON response from Power Automate but when I monitor the app, I get the error:

JSON parsing error, expected ‘object’ but got ‘array’.

My schema starts as:

{

“type”: “array”,

“items”: {

“type”: “object”,

“properties”: {

“ItemInternalId”: {

“type”: “string”

},

“comp_id”: {

“type”: “string”

},

“client_no”: {

“type”: “integer”

}

…

It’s pulling the data in an SQL Get Rows step and the Response step is using ‘value’ from the Get Rows step.

Duncan,

This is a tough one to diagnose because I don’t have access to your data. But I noticed your schema is not a JSON object. Perhaps you could try wrapping it between {} brackets.

{

{

“type”: “array”,

“items”: {

“type”: “object”,

“properties”: {

“ItemInternalId”: {

“type”: “string”

},

“comp_id”: {

“type”: “string”

},

“client_no”: {

“type”: “integer”

}

}

After much messing about, I removed and re-added the Flow within Power Apps and that fixed it!

It’s annoying as I’ve had a similar issue previously but didn’t think to do that.

Best site ever to learn powerapps stuff. Congrats.

Dear Matthew, I’m just wondering what’s the best way to handle two different data sets with around 2500 records each, let’s call it: tbl_1 and tbl_2.

I just need to validate information stored in tbl_2 for each record in tbl_1.

Process can be done using some hints in this article, but takes long time (+15 mins).

Note: IDs for both tables are unique and can be looked up or filtered. Sources are both views, so I can’t patch.

Hope there is an elegant solution for this.

Gus,

15 minutes is a crazy-long amount of time to load… yikes! Can you improve performance by limiting the columns which get downloaded with the SHOWCOLUMNS function? I have a feeling your tables are very wide.

Tx Matt,

I figured out to reduce time to 3 mins before reading your advice.

However, I haven’t thought about the number of columns. I confirm your feeling that both tbls are very wide.

I’m gonna try calling only the specific columns needed for the solution.

I would like to know if it is a much better way to deal with collections using the ‘With’ function. When working with large data performance is key specially when we need bulk updates. It could be very interesting to see if you can make an article dealing with that and comparing the clearcollect function vs with function.

Tx a lot for your advice.

Hi Matthew,

In the 4000 rows section, I figured out a way which responds at ‘warp speed’ (1 second) to my 2500+ rows wide list.

I did use a combination of With, ClearCollect, Filter & ShowColumns (limiting the columns).

It is around 3x faster than the ClearCollect solution alone.

I was inspired by a mix of ideas from Warren Belz & your suggestion.

With(

{

wAsc:

Sort(MyList;ID);

wDesc:

Sort(MyList;ID;Descending)

}

;

ClearCollect(

MyCollection;

ShowColumns(

wAsc;

“ID”;”Colum2″;”Colum3″

);

Filter(

ShowColumns(

wDesc;

“ID”;”Colum2″;”Colum3″

);

!(ID in wAsc.ID)

)

)

)

Gus,

I’m always up for hearing about performance improvements. This one awesome! Thank you so much for sharing 🙂

Thank you, Gus and Matthew

I am interested in hearing more about performance improvements. I am currently having quite a latency in one of my gallery controls. Here are some

App settings are set to 2k data rows and more than 4k records in the dataverse table.

Gallery code:

Search(

Filter(

collectionDatasource,

‘ID’ in Collection1.’ID’ && ‘ID’ in Collection2.’ID’

&& ‘ID’ in Collection3.’ID’

),

DataverseFieldName.Text,

“field_1″,”field_2”

)

What would be a good way to reduce latency drastically? Would you change the gallery code or implement other workarounds such as JSON flow and paginated gallery?

Thank you

MDC,

Connecting directly to the datasource will improve performance since it only retrieves 100 records at a time. If the user does not scroll past record 100, the 101st records won’t be retrieved.

Yes, you could also try paginating your gallery and reducing the number of controls in the gallery as well. Try to reduce the number of calculations going on too if you have any.

HI, can I add this code on, OnStart of the app? because I want to load the data when the AppStarts..

?? Help

Mathew thanks for your help. Its working now my app with more than 2000 values.

Thanks!!!!

Which option did you use?

JC,

I’ve used all of these options before. I prefer #2 – Collect Data In Chunks With For All Loops.

How to create a browse screen based on the search function and to give hit only for those search items.

Hari,

Please check out this article I wrote on designing a search function that is delegation-friendly:

https://www.matthewdevaney.com/power-apps-search-function-delegation-warning-workarounds/

I’m trying to use the JSON response back to PowerApps into a collection, but none of the items are ever collected. If I look at the collection I can see it was formatted to the correct fields, just no data. This is on files in a doc library.

Craig,

Do you get any errors? What shows up in Power Apps monitor? I’ve only tested this method on a SharePoint list, not a document library.

This is great, thanks for sharing. I’ve a doubt,

I tried Tip #3 via Power Automate, however I was not able to retrieve all rows from my data source only 2048 oppose to 5000+ rows. Could you help me with this? Data Source I’m using is SQL Server.

Thank you!

Ishta,

Is the problem…

1. The flow action to get SQL records returns 5000+ rows and the response sent to Power Apps is 2048. If this is the case how did you validate the action returned all 5000+ rows?

Or is it..

2. The flow action to get SQL records only contains 2048. If this is true, can you go to advanced settings in the flow action and increase the pagination limit.

I have the same problem as Ishta. SQL ‘get rows’ action returns 2048 records. Any tips?

Tomas,

Did you try enabling the pagination setting like I said above?

This works for me! Thanks!

Well, I am also facing this issue, Do I have to set the pagination limit to the number of records I have in SQL ?

Mustafa,

Not sure. I’ve never made a SQL based Power Apps app.

This is fantastic, I have been beating my head against the 2000 record limit for awhile now. I have an approximately 17,000 record AD to search and can’t get them to enable MSGraph. Now to find out just how many rows it takes to break the collection object 🙂

Six,

It depends. On q desktop computer it would take alot. On a tablet or phone device it will take less records. Then you must also factor in any throttling due to API limits being reached.

I can’t add more than 1 Excel Table to my collection formula, it gives me an error. I imported like 20 tables but cant select them all, only 1 at a time in the formula

any help?

M Som,

I am unclear about what you are asking. All of the methods above involve operating on a single table.

Hi Matthew,

Thanks for the entire topics , it helped me a lot !

I have one query , if we are using SP as Datasource and even after applying filters and all the datasource size exceeds the 2000 limit , the best approach is to go with PowerAutomate option stated above.

Ajit,

I suggest trying these approaches in order. If you use Power Automate’s response action its going to require a premium license.

1. Double Maximum Collection Size To 4,000 Rows

2. Collect Data In Chunks With For All Loops

3. Get A JSON Response From Power Automate

You may also wish to try the approach of using the ParseJSON function + Power Automate described here by Reza Dorrani. It’s a new function that could likely do the trick.

https://www.youtube.com/watch?v=FqfLiJDdC3Q

Thanks Matthew !

Please help me to understand further –

To hold data where the count will grow in future as well.

I believe 3 .Get A JSON Response From Power Automate is perfect however need premium license.

Your taught on article Power Apps Delegation – SharePoint – Practical Power Apps Section – “Newest xxxx records”.

I will definitely go through Reza’s video.

Ajit,

Yes, the JSON Response method can only be done with a premium license. I recently learned of a new way to do it with the experimental ParseJSON function for free. I’ll be posting about that in the coming month ahead.

Ajit,

I’ve now posted the new article. If you are a subscriber you will have received it in your inbox.

Thanks Matthew and I believe we can apply oData filter to get the matching 5000 records from the list if the count is more.

Great and helpful Article Thanks so much

Peter,

You’re welcome. I’m glad it helped.

I have a question about the lookup function

I used the lookup function to provide staff name when ID is typed it worked now i want to provide ID when name is entered and its giving me a circular reference error

how do i handle this?

Peter,

I’m not sure how this is related to the article I posted. Please let me know.

Hi, Mathew well I have a SQL table with over 5000+ rows and I want to load all the data into a collection for aggregation and group by purpose in powerApps

But I am not able to do with this approach:

Concurrent( ClearCollect( colCarsChunk1, Sort('Car Inventory', ID, Ascending) ), ClearCollect( colCarsChunk2, Sort('Car Inventory', ID, Descending) ) ); ClearCollect( colCars, colCarsChunk1, Filter(colCarsChunk2, Not(ID in colCarsChunk1.ID)) ); Clear(colCarsChunk1); Clear(colCarsChunk2);its only showing 1000 (row count) which is wrong.

where I am doing wrong please tell me?

Mustafa,

You must increase the delegation limit in Advanced Settings to 2,000 rows. Even then, this method will only return 4,000 rows maximum.

i imported a static Excel book with two sheets of 12,000 records each but on writing this code it keeps highlighting the second table and the collection is not created what do i do

ClearCollect(

AlphaAll,

ListAlp1,

ListAlp2,

);

Olabode,

Please send the error message you received. It is needed to diagnose.

Also, in the code you sent there is an extra comment at the end.

This was great, but I ran into a problem. I imported 6 excel tables and created a collection that populates my gallery. However, when using a text input to search that gallery there is significant delay when I clear the text input to type a new search term. Is there any solution to this?

Jeff,

Set the DelayOutput property of the search text input to true. That way your gallery which references the text box won’t recalculate until you stop typing.

Hi,

I have a sharepoint list which has more than 5000 records, and I am using power automate to get the records into my app, but in the app I have an operation where I update the existing records by doing a lookup to sharepoint list directly and also use the filter condition for records which are created today for the lookup and then do my update.

Will this work for 5000 or more records?

Is there any other way?

Very useful, thank you so much.

I did it this way by dividing the Excel tables, but when creating the collection, it considers the delegation limit, returning 6,000 rows and not the 45,000 that it should return.Fiz dessa forma dividindo as tabelas do Excel, mas ao criar a coleção, ela considera o limite de delegação, retornando 6.000 linhas e não as 45.000 que deveria retornar.

Matheus,

Excel tables imported as static data do not have a delegation limit.